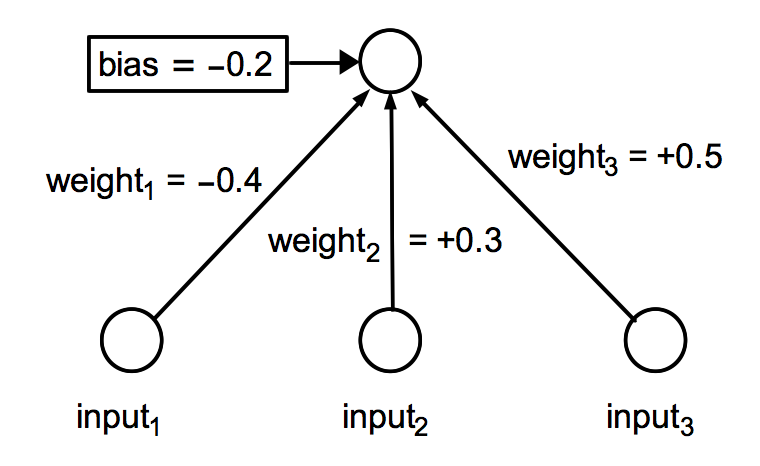

Recall that a binary threshold neuron, also known as a perceptron, multiplies each of its binary inputs by their respective weight values, and adds up the results. The neuron's bias value is then added to this to produce a final sum S, which represents the total amount of excitatory and inhibitory activation received by the neuron. This sum is then compared to 0, and the output of the neuron is 1 if S ≥ 0, or 0 if S < 0. The neuron's bias and weight values can be any positive or negative numbers, but the output is always either 0 or 1. A three-input perceptron is shown below:
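The forward computation described above can be sketched in Python as follows. The function name `perceptron_output` and the two-input example weights are hypothetical and chosen only for illustration. They are not the values of the neuron in the figure.

```python
def perceptron_output(inputs, weights, bias):
    """Return (S, output) for a binary threshold neuron.

    S is the weighted sum of the inputs plus the bias; the output is
    1 if S >= 0 and 0 otherwise.
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    s = sum(w * x for w, x in zip(weights, inputs)) + bias
    return s, (1 if s >= 0 else 0)


# Hypothetical two-input neuron, used only to illustrate the arithmetic
# (NOT the neuron from the figure).
s, out = perceptron_output([1, 1], weights=[0.5, -0.25], bias=-0.5)
print(s, out)  # -0.25 0   (S = 0.5 - 0.25 - 0.5 < 0, so the output is 0)
```

Note that a sum of exactly 0 counts as "on": with the same neuron, the input `[1, 0]` gives S = 0.0 and an output of 1.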

For each of the following input patterns, what will the output of the above neuron be? Show your work, including the sum S in each case.

- 1 0 0

- 1 0 1

- 1 1 1

In the 1940s, Warren McCulloch and Walter Pitts showed that simple binary threshold neurons could compute the logical functions AND, OR, and NOT:

| Inputs | AND output | OR output |
|---|---|---|
| 0 0 | 0 | 0 |
| 0 1 | 0 | 1 |
| 1 0 | 0 | 1 |
| 1 1 | 1 | 1 |

| Input | NOT output |
|---|---|
| 0 | 1 |
| 1 | 0 |

For example, we can use a one-input neuron with weight1 = -1.0 and bias = 0.5 to compute the NOT function: input 0 gives S = 0.5 (output 1), and input 1 gives S = -1.0 + 0.5 = -0.5 (output 0). In principle, any logical function whatsoever can be computed by combining AND, OR, and NOT into larger circuits; together, AND, OR, and NOT are said to be computationally universal. Thus the work by McCulloch and Pitts showed that large networks containing many binary threshold neurons were capable in principle of universal computation, like Turing machines.
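The claim about the NOT neuron can be checked mechanically against its truth table. The sketch below uses hypothetical helper names (`threshold_neuron`, `computes`). The AND setting (weights 1.0 and 1.0, bias -1.5) is one illustrative choice that is not taken from the text. The same helper can be used to test candidate answers to the OR questions that follow.

```python
from itertools import product


def threshold_neuron(inputs, weights, bias):
    """Binary threshold neuron: 1 if sum(w*x) + bias >= 0, else 0."""
    s = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if s >= 0 else 0


def computes(truth_table, weights, bias):
    """True if the neuron reproduces every row of truth_table.

    truth_table maps a tuple of binary inputs to the desired output.
    """
    return all(threshold_neuron(inputs, weights, bias) == target
               for inputs, target in truth_table.items())


NOT = {(0,): 1, (1,): 0}
# All four two-input patterns; output 1 only when both inputs are 1.
AND = {x: int(all(x)) for x in product((0, 1), repeat=2)}

print(computes(NOT, weights=[-1.0], bias=0.5))        # True
print(computes(NOT, weights=[1.0], bias=0.5))         # False: input 1 gives S = 1.5
print(computes(AND, weights=[1.0, 1.0], bias=-1.5))   # True
```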

For a two-input neuron with a negative bias of -0.5, give values for weight1 and weight2 that will make the neuron behave like the OR function. Show the value of the sum S for each of the four input cases.

Is it possible for a neuron with a positive bias to compute OR? If yes, give specific weight and bias values that work. If no, explain clearly why not.

Suppose we want the neuron from question 1 above (starting with the weights and bias as shown) to produce an output of 1 in response to the input pattern 1 1 0. Using a learning rate of ε = 0.3, compute the new weight and bias values that result after applying the Perceptron Training Procedure to this input pattern for one step. Do the new values result in the correct output? Show your work clearly.
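The Perceptron Training Procedure is not spelled out in this section. A common statement of it, assumed here, uses the target output t, the actual output y, each input x_i, and the learning rate ε:

$$
\begin{aligned}
w_i &\leftarrow w_i + \varepsilon\,(t - y)\,x_i \\
\text{bias} &\leftarrow \text{bias} + \varepsilon\,(t - y)
\end{aligned}
$$

Because t − y is 0, +1, or −1, a step changes nothing when the output is already correct. Otherwise it moves the bias, and each weight whose input is 1, by ε toward the target. Weights on inputs that are 0 do not change.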

Suppose we want the neuron from question 1 to produce an output of 0 in response to the input pattern 0 1 1. Assuming a learning rate of ε = 0.1, use the Perceptron Training Procedure to compute a new set of weight/bias values that will produce the correct output. Fill in the table below for as many steps as needed, showing the new weight/bias values computed at each step. Note: you may not need to fill in all rows of the table.

| Step # | weight1 | weight2 | weight3 | bias | Output |
|---|---|---|---|---|---|
| 0 | -0.4 | +0.3 | +0.5 | -0.2 | 1 |
| 1 | | | | | |
| 2 | | | | | |
| 3 | | | | | |
| 4 | | | | | |
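A table like this can be generated mechanically. The following is a minimal sketch in Python. It assumes the update rule w_i ← w_i + ε(t − y)x_i and bias ← bias + ε(t − y), a common form of the Perceptron Training Procedure; the course's exact statement may differ. The names `output` and `train_on_pattern` are hypothetical, and the example neuron is made up and is not the one from question 1. The sketch uses `fractions.Fraction` so that a sum that should be exactly 0 is not nudged above or below 0 by floating-point rounding. This matters because the neuron outputs 1 when S ≥ 0.

```python
from fractions import Fraction


def output(inputs, weights, bias):
    """Binary threshold unit: 1 if S = sum(w*x) + bias >= 0, else 0."""
    s = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if s >= 0 else 0


def train_on_pattern(inputs, target, weights, bias, rate, max_steps=100):
    """Apply the assumed perceptron update until the output equals target.

    Returns rows of (step, weights, bias, output), starting with step 0.
    Values go through str() so that 0.1 becomes exactly 1/10.
    """
    weights = [Fraction(str(w)) for w in weights]
    bias = Fraction(str(bias))
    rate = Fraction(str(rate))
    y = output(inputs, weights, bias)
    rows = [(0, list(weights), bias, y)]
    step = 0
    while y != target:
        step += 1
        if step > max_steps:
            raise RuntimeError("output did not reach the target")
        error = target - y  # +1 or -1 here, since y != target
        weights = [w + rate * error * x for w, x in zip(weights, inputs)]
        bias = bias + rate * error
        y = output(inputs, weights, bias)
        rows.append((step, list(weights), bias, y))
    return rows


# Hypothetical two-input example: want output 1 for pattern 1 1, rate 0.1.
for step, w, b, y in train_on_pattern((1, 1), 1, [0.2, -0.1], -0.7, 0.1):
    print(step, [float(v) for v in w], float(b), y)
# 0 [0.2, -0.1] -0.7 0
# 1 [0.3, 0.0] -0.6 0
# 2 [0.4, 0.1] -0.5 1
```

In this example, S at step 2 is exactly 0.4 + 0.1 - 0.5 = 0, so the output flips to 1 on that step. With plain floats, the same sum could land a tiny amount on either side of 0.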