Bio-Inspired Artificial Intelligence — Spring 2023

Assignment 5: The Backpropagation Learning Algorithm

Due by class time Tuesday, April 18

In this assignment, you will implement a basic version of the backpropagation learning algorithm for a neural network with 2 inputs, 2 hidden units, and 1 output unit, and then use it to learn simple logical functions such as AND and XOR. As a reminder, here is a summary of the mathematical details of the algorithm.

To get started, download and unzip the folder assign5.zip, which contains the starting code and an automated tester program that you can use to verify that your methods work correctly.

Part 1: Basic Implementation

Implement the NeuralNetwork method propagate(self, pattern). This method should take a length-2 input pattern such as [1,0] and return the output produced by the network in response. As a side effect, the NeuralNetwork's instance variables self.hidden1, self.hidden2, and self.output should be set to the newly computed activation values of the hidden and output units.
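As a rough illustration of the forward pass described above, here is a minimal standalone sketch. It is not the starting code from assign5.zip: the sigmoid activation, the dictionary of weights, and the key names (modeled on the instance variable names used in Part 2) are all assumptions made for the example.

```python
import math

def sigmoid(x):
    """Logistic activation function (assumed here; use the one from class)."""
    return 1.0 / (1.0 + math.exp(-x))

def propagate(w, pattern):
    """Forward pass through a 2-2-1 network.

    `w` is a hypothetical dict of weights and biases; in the assignment
    these would be instance variables of the NeuralNetwork object.
    Returns the two hidden activations and the output activation.
    """
    x1, x2 = pattern
    hidden1 = sigmoid(w["hidden1_w1"] * x1 + w["hidden1_w2"] * x2 + w["hidden1_bias"])
    hidden2 = sigmoid(w["hidden2_w1"] * x1 + w["hidden2_w2"] * x2 + w["hidden2_bias"])
    output = sigmoid(w["output_w1"] * hidden1 + w["output_w2"] * hidden2 + w["output_bias"])
    return hidden1, hidden2, output
```

In the actual method, the three returned values would instead be stored in self.hidden1, self.hidden2, and self.output, and only the output would be returned.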

Next, implement the method total_error(self, patterns, targets). This method should take a list of input patterns and a list of their corresponding target values, and return a pair of numbers (as a tuple): (1) the total-sum-squared (TSS) error of the network with respect to the current weights and given targets, and (2) the fraction of output values that are correct (that is, within self.tolerance of the given target values), expressed as a number in the range 0 to 1. For example, if patterns is a list of the four binary patterns [0,0], [0,1], [1,0], [1,1], and the network produces outputs that correctly match the targets for three of the four patterns, the fraction of correct outputs would be 0.75. The TSS error is similar to the "quadratic cost" function defined in the backpropagation derivation we went over in class, but does not include the extra factors of 1/2 or 1/n.
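The two quantities can be sketched as follows. This version takes a list of already-computed outputs rather than input patterns, purely to keep the example self-contained; the function name, its signature, and the choice to count an error exactly equal to the tolerance as correct are assumptions.

```python
def tss_and_fraction_correct(outputs, targets, tolerance=0.1):
    """Return (TSS error, fraction of outputs within tolerance of target)."""
    tss = 0.0
    num_correct = 0
    for output, target in zip(outputs, targets):
        error = target - output
        tss += error ** 2              # no 1/2 or 1/n factor
        if abs(error) <= tolerance:    # boundary handling is an assumption
            num_correct += 1
    return tss, num_correct / len(targets)
```

In the real method, each output would come from calling propagate on the corresponding pattern, and the tolerance would be read from self.tolerance.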

Next, implement the method adjust_weights(self, pattern, target). This method should take a single input pattern and its corresponding target value, and update all of the weights and biases in the network according to the backpropagation learning algorithm.
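For orientation, one backpropagation step for a 2-2-1 network might look like the sketch below. It assumes sigmoid units and the quadratic cost with the factor of 1/2 (so the output error term is simply target minus output); defer to the class derivation if it differs. The weight dictionary and its key names are hypothetical stand-ins for the network's instance variables.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(w, pattern):
    """Forward pass; returns (hidden1, hidden2, output)."""
    x1, x2 = pattern
    h1 = sigmoid(w["hidden1_w1"] * x1 + w["hidden1_w2"] * x2 + w["hidden1_bias"])
    h2 = sigmoid(w["hidden2_w1"] * x1 + w["hidden2_w2"] * x2 + w["hidden2_bias"])
    out = sigmoid(w["output_w1"] * h1 + w["output_w2"] * h2 + w["output_bias"])
    return h1, h2, out

def backprop_step(w, pattern, target, learning_rate):
    """Return a new weight dict after one update on a single pattern."""
    x1, x2 = pattern
    h1, h2, out = forward(w, pattern)
    # Error signals ("deltas"); the sigmoid derivative is a * (1 - a).
    delta_out = (target - out) * out * (1.0 - out)
    # Hidden deltas must use the output weights from BEFORE the update.
    delta_h1 = delta_out * w["output_w1"] * h1 * (1.0 - h1)
    delta_h2 = delta_out * w["output_w2"] * h2 * (1.0 - h2)
    new = dict(w)
    new["output_w1"] += learning_rate * delta_out * h1
    new["output_w2"] += learning_rate * delta_out * h2
    new["output_bias"] += learning_rate * delta_out
    new["hidden1_w1"] += learning_rate * delta_h1 * x1
    new["hidden1_w2"] += learning_rate * delta_h1 * x2
    new["hidden1_bias"] += learning_rate * delta_h1
    new["hidden2_w1"] += learning_rate * delta_h2 * x1
    new["hidden2_w2"] += learning_rate * delta_h2 * x2
    new["hidden2_bias"] += learning_rate * delta_h2
    return new
```

Returning a fresh dictionary is only a convenience for the example; the method in the assignment would update the instance variables in place. A common mistake is to update the output weights first and then use the new values when computing the hidden deltas.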

Next, implement the method train(self, patterns, targets). This method should perform training epochs repeatedly until the fraction of correct outputs equals 1.0. A training epoch consists of a single pass through all of the patterns and targets in the given dataset, in some order (possibly randomized). However, if self.epoch_limit epochs are reached before all of the patterns are learned to within tolerance, the training loop should terminate with the warning message "Failed to learn targets within E epochs", where E is the epoch limit. For each epoch, the epoch number, TSS error, and fraction of correct outputs should be reported, as in the example output shown below:

    >>> n.train(inputs, XORtargets)
    Epoch # 0: TSS error = 1.00399, correct = 0.000
    Epoch # 1: TSS error = 1.00349, correct = 0.000
    Epoch # 2: TSS error = 1.00221, correct = 0.000
    Epoch # 3: TSS error = 1.00102, correct = 0.000
    Epoch # 4: TSS error = 1.00031, correct = 0.000
    Epoch # 5: TSS error = 1.00004, correct = 0.000
    ...
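One possible shape for the training loop is sketched below as a standalone function operating on any object that provides total_error, adjust_weights, and epoch_limit as described above. Reporting epoch 0 before any weight updates, shuffling the pattern order each epoch, and returning the epoch count are all assumptions made for illustration, not requirements stated in the assignment.

```python
import random

def train(net, patterns, targets):
    """Train until every output is within tolerance or the limit is hit.

    Returns the number of epochs performed (an addition for convenience).
    """
    epoch = 0
    tss, correct = net.total_error(patterns, targets)
    print(f"Epoch # {epoch}: TSS error = {tss:.5f}, correct = {correct:.3f}")
    while correct < 1.0:
        if epoch >= net.epoch_limit:
            print(f"Failed to learn targets within {net.epoch_limit} epochs")
            break
        # One epoch: a single pass over all patterns, in random order.
        order = list(range(len(patterns)))
        random.shuffle(order)
        for i in order:
            net.adjust_weights(patterns[i], targets[i])
        epoch += 1
        tss, correct = net.total_error(patterns, targets)
        print(f"Epoch # {epoch}: TSS error = {tss:.5f}, correct = {correct:.3f}")
    return epoch
```

Inside the NeuralNetwork class, the same logic would use self in place of net.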

Automated Tester Program

I have included an automated tester program called NNinspector.py, which you should use to verify that your methods are working correctly. Make sure that this file is in the same folder as your assign5.py file, and then type the command NNinspector.part1() at the Python prompt to test your methods from Part 1. The tester will test your NeuralNetwork methods in order. It will also test the ability of your network to learn XOR, using two different sets of initial weight values. If it detects a problem, it will immediately stop and report which method is at fault (and will try to explain what caused the problem as best it can).

Part 2: Adding Momentum

To add support for momentum, your network will need to keep track of the amount that each weight and bias changed on the previous time step, so that the adjust_weights method can calculate the weight updates correctly.

Add the following instance variables to the __init__(self) constructor of your NeuralNetwork class:

    self.output_bias_change = 0
    self.hidden1_bias_change = 0
    self.hidden2_bias_change = 0
    self.output_w1_change = 0
    self.output_w2_change = 0
    self.hidden1_w1_change = 0
    self.hidden1_w2_change = 0
    self.hidden2_w1_change = 0
    self.hidden2_w2_change = 0

Also add the above lines to the set_weights(self, weight_list) method, so that any residual weight-change information will get reset to zero whenever a new set of weights is installed in the network. This method is mainly used by the NNinspector for testing purposes.

Modify your method adjust_weights(self, pattern, target) to update the weights and biases based on the momentum parameter self.momentum, using the above instance variables to keep track of the amount that each weight and bias changed on the previous time step.

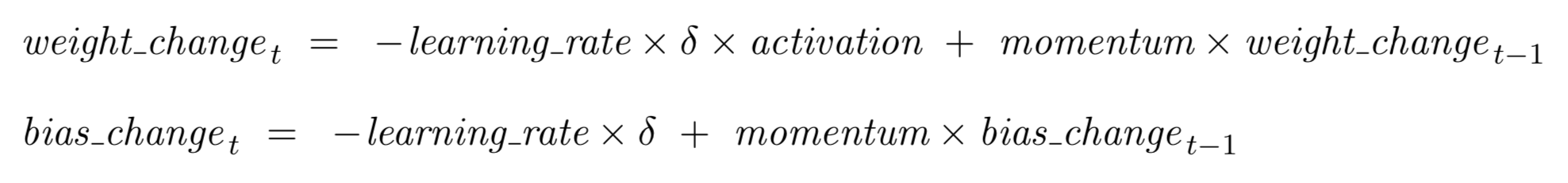

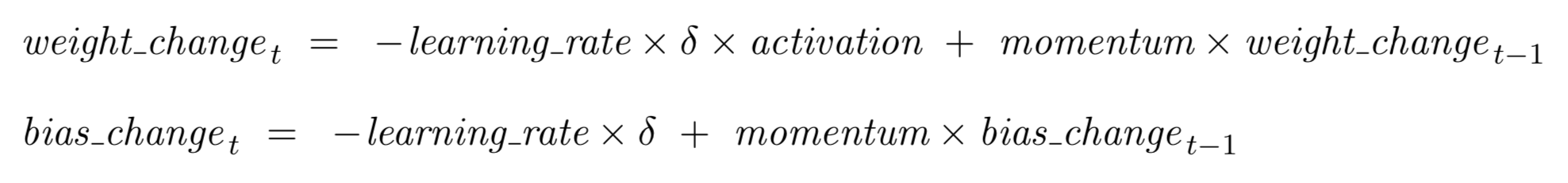

Your other NeuralNetwork methods do not need to be modified. As we

discussed in class, the rules for calculating the change to each weight

and bias at time t, based on the previous change at

time t–1, are:

To test your network with momentum, type NNinspector.part2() at the Python prompt.
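As a small illustration of the bookkeeping involved, the sketch below applies a momentum update to a single weight. It assumes the commonly used form of the rule, in which the new change is the learning-rate term plus the momentum parameter times the previous change; check it against the rules given in class. The function and parameter names are hypothetical.

```python
def momentum_update(weight, gradient_term, previous_change, learning_rate, momentum):
    """Return (new_weight, change) for one weight or bias.

    `gradient_term` stands for delta * incoming activation (or just delta
    for a bias), as computed by ordinary backpropagation.
    """
    change = learning_rate * gradient_term + momentum * previous_change
    return weight + change, change
```

The returned change is what would be stored in the matching instance variable (for example self.output_w1_change) so that it is available on the next time step. With momentum set to 0, the update reduces to plain backpropagation.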

Part 3: Experiments

Once your neural network passes all of the NNinspector's tests, try training it on various logical functions such as AND, OR, NAND, NOR, and XNOR. Then run the following experiments. For each experiment, first set the learning rate and momentum to the desired values. Then train your network five times on the desired targets, starting from new randomized weights each time (by calling the initialize method, as shown in the example below), and record the number of training epochs required to fully learn the training task to within a tolerance of 0.1. What combination of parameter settings seems to give the best overall performance? How sensitive is the learning process to changes in the parameter values? Feel free to try other combinations of parameter settings or target functions, or other experiments if you wish.

    >>> n.learning_rate = 0.7
    >>> n.momentum = 0.5
    >>> n.initialize(); n.train(inputs, ANDtargets)

| Experiment | Targets | Learning rate | Momentum | Average # of epochs (over 5 trials) |
| --- | --- | --- | --- | --- |
| #1 | ANDtargets | 0.1 | 0 | |
| #2 | ANDtargets | 0.5 | 0 | |
| #3 | ANDtargets | 0.7 | 0 | |
| #4 | ANDtargets | 0.7 | 0.5 | |
| #5 | ANDtargets | 0.7 | 0.9 | |
| #6 | XORtargets | 0.1 | 0 | |
| #7 | XORtargets | 0.5 | 0 | |
| #8 | XORtargets | 0.7 | 0 | |
| #9 | XORtargets | 0.7 | 0.5 | |
| #10 | XORtargets | 0.7 | 0.9 | |
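If you would rather not average the five trials by hand, a small helper along these lines could fill in the last column. It is only a sketch: run_trial is a hypothetical callable that you would write yourself to reinitialize the network, train it, and return the number of epochs that trial took (the assignment does not say that train returns anything, so you may need to count epochs some other way).

```python
def average_epochs(run_trial, trials=5):
    """Run `run_trial` the given number of times.

    Returns (average epoch count, list of individual counts).
    """
    counts = [run_trial() for _ in range(trials)]
    return sum(counts) / trials, counts
```

Keeping the individual counts alongside the average makes it easier to see how much the results vary between random initializations.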

What to Turn In

Submit your completed assign5.py file containing your working NeuralNetwork class definition, and a short written report in PDF format describing your learning results and the experiments you tried (including your table of results from Part 3 above), using the Homework Upload Site.

If you have questions about anything, don't hesitate to ask!